My research focuses on 3D user interface design for mixed reality applications, particularly in interdisciplinary fields. My work to date has addressed challenges in virtual reality (VR) and human-computer interaction (HCI), developing user interfaces for applications involving virtual agents, healthcare, education, training, social behavior, and motion sickness. Building on this foundation, I am now shifting my research toward integrating artificial intelligence and multimodal sensing to enable more adaptive and intelligent virtual agents. My future work will explore how AI-driven models can enhance virtual agent capabilities by leveraging multimodal data, including speech, vision, and gesture, to create more natural, personalized, and context-aware interactions in immersive environments.

2019 - present: Ph.D., Computer Science, University of Minnesota, USA

2017 - 2019: M.S., Computer Science, University of Minnesota, USA

2013 - 2017: B.S., Information and Computing Science, Sun Yat-sen University, China

Research Projects

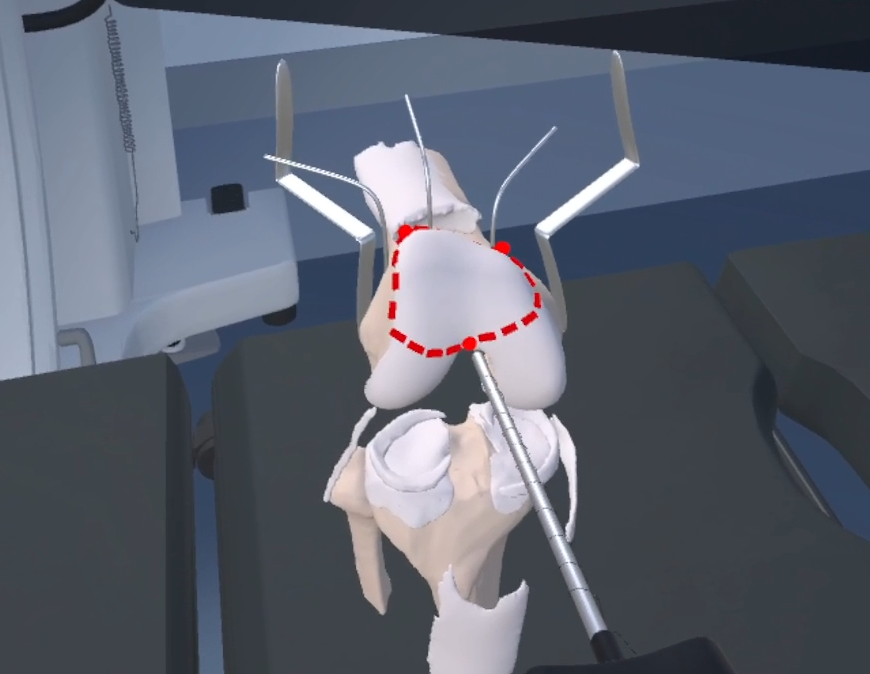

Knee Surgery in VR

This research aims to develop a virtual reality (VR) training system for knee surgery simulation. In collaboration with knee surgeons, we integrated an automatic pause module at each step, presenting relevant medical knowledge through videos while highlighting the appropriate instruments for interaction, enhanced by haptic feedback and visual cues. [Video]

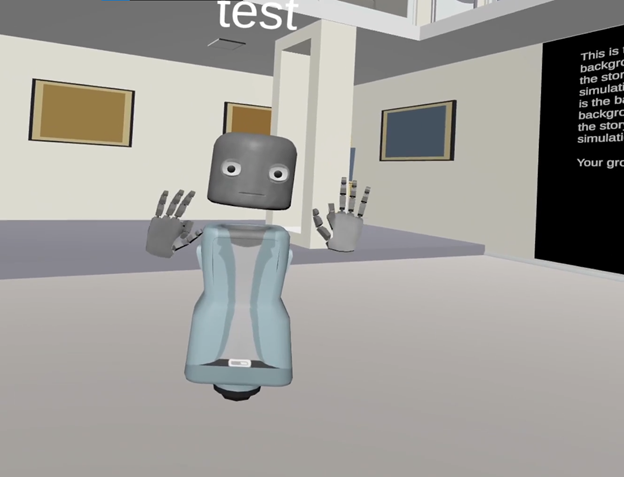

Nurse Training in Virtual Reality

This research aims to help nursing students improve their technical knowledge and proficiency through procedural skills training. The simulated scenarios for this project include chest pain treatment and oxygen therapy. Two user studies have been performed to evaluate the feasibility, user acceptance, and effectiveness of using this customized simulation to evaluate nurse competency. [Scenario1, Scenario2]

Understanding Communication Technology and Social Behavior

This research compares the effects over time of video conferencing versus VR in supporting the emergent group processes and interactions that affect team performance, team processes, individual well-being, and social need fulfillment. Sponsored by NSF. [Video]

Publications

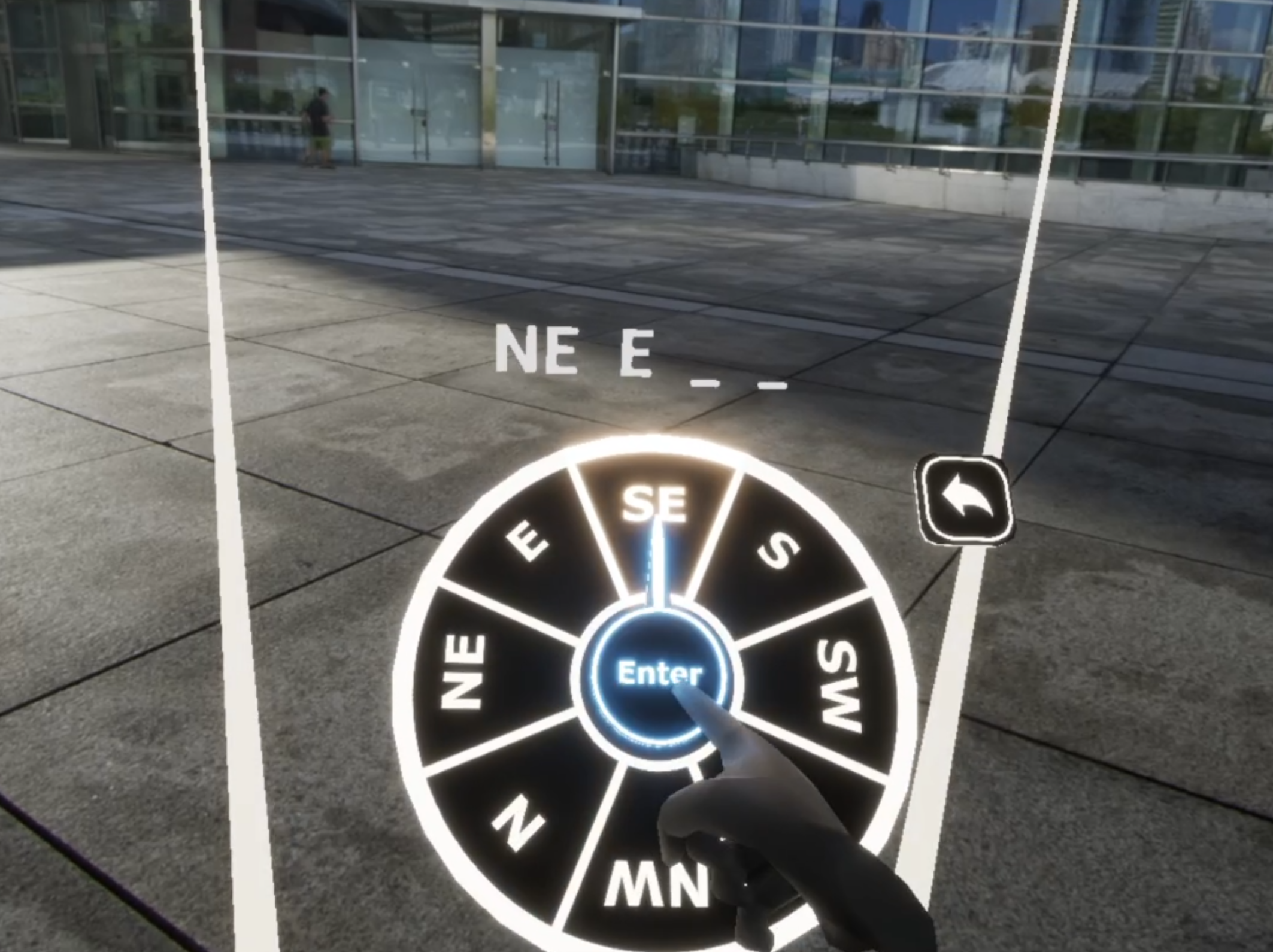

Y. Huang, D. Zhang and E. S. Rosenberg, “Direction-Based Authentication: Combining Symbolic Input and Contextual Cues for Virtual Reality Password Entry,” 2024 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bellevue, WA, USA, 2024, pp. 681-689, doi: 10.1109/ISMAR62088.2024.00083. [Paper, Video]

Y. Huang, D. Zhang and E. S. Rosenberg, “DBA: Direction-Based Authentication in Virtual Reality,” 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Shanghai, China, 2023, pp. 953-954, doi: 10.1109/VRW58643.2023.00319. [Paper, Video]

D. Zhang et al., “COVID-Vision: A Virtual Reality Experience to Encourage Mindfulness of Social Distancing in Public Spaces,” 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Lisbon, Portugal, 2021, pp. 697-698, doi: 10.1109/VRW52623.2021.00231. [Paper, Video]

Professional Services

Peer Reviewer:

- 2025 ACM CHI, ACM VRST, IEEE VR, IEEE ISMAR

- 2024 ACM CHI, ACM VRST, ACM SUI, IEEE VR, IEEE ISMAR

- 2023 UbiComp/ISWC - ISWC Notes and Briefs

Student Volunteer:

- 2024 IEEE ISMAR

- 2024 IEEE VR

- 2020 IEEE VR